How could ChatGPT be more humane?

Applying the principles of humane technology to the top Gen AI app

In November 2022, ChatGPT made waves around the world, becoming the starting point for millions of people exploring what AI can do. As we integrate AI further into our lives, the chance for ChatGPT to exemplify humane technology becomes both more pressing and more promising. As one of the organizations shaping the future of AI, OpenAI has a golden opportunity to align ChatGPT with principles that nurture humanity.

What is humane technology, you may ask? Among other things, it means:

teens and adults not taking their own lives because of AI chatbots

living phone-free when we’re connecting in person; being present in body and mind instead of “forever elsewhere”

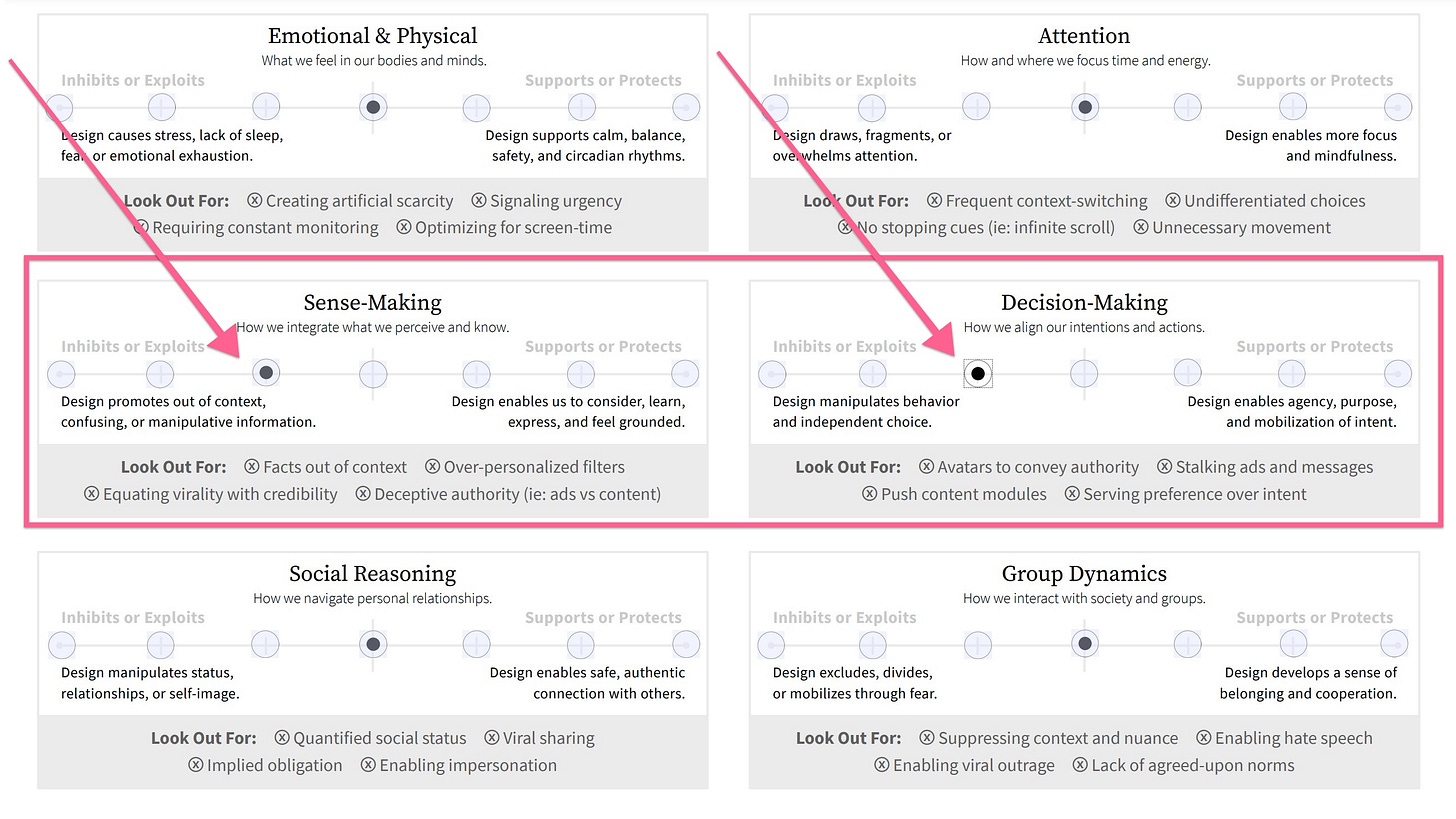

The central tenets, according to the Center for Humane Technology:

What if technology treated your attention and intention as sacred?

Technology is not neutral; we have a responsibility to do more than “give people what they want.”

Let’s evaluate ChatGPT against these humane design principles, building on the MVP we developed at my humane tech meetup this summer.

While I don’t see ChatGPT as supportive or protective (it’s neutral at best in most cases), two areas, “sense-making” and “decision-making,” have the most room for improvement. Here’s how we’re defining those terms:

Sense-Making: Ensuring information is clear and verifiable.

Decision-Making: Enhancing user agency and understanding.

Discernment is as challenging with generative AI as it is with social media, because generative AI by its nature acts like an eager puppy, aiming to please. Beyond a warning in small print at the bottom of the screen, how can ChatGPT teach users 1) where the information comes from, and 2) how much to trust it?

To explore this question, I worked with product designers from Meta, Apple, and elsewhere who are interested in humane technology. Our initial thoughts:

Features Implemented:

1: Educational Button: Adding a "Learn more" button to explain how AI works, demystifying the technology and making it less of a black box.

2: Teaching Prompts: Providing prompting resources that help users formulate better questions and reduce frustration.

3: Help Center: Offering support to users who need a helping hand.

4: Confidence Scale: Introducing color-coded responses to indicate the AI's confidence level, helping users gauge the reliability of the information.

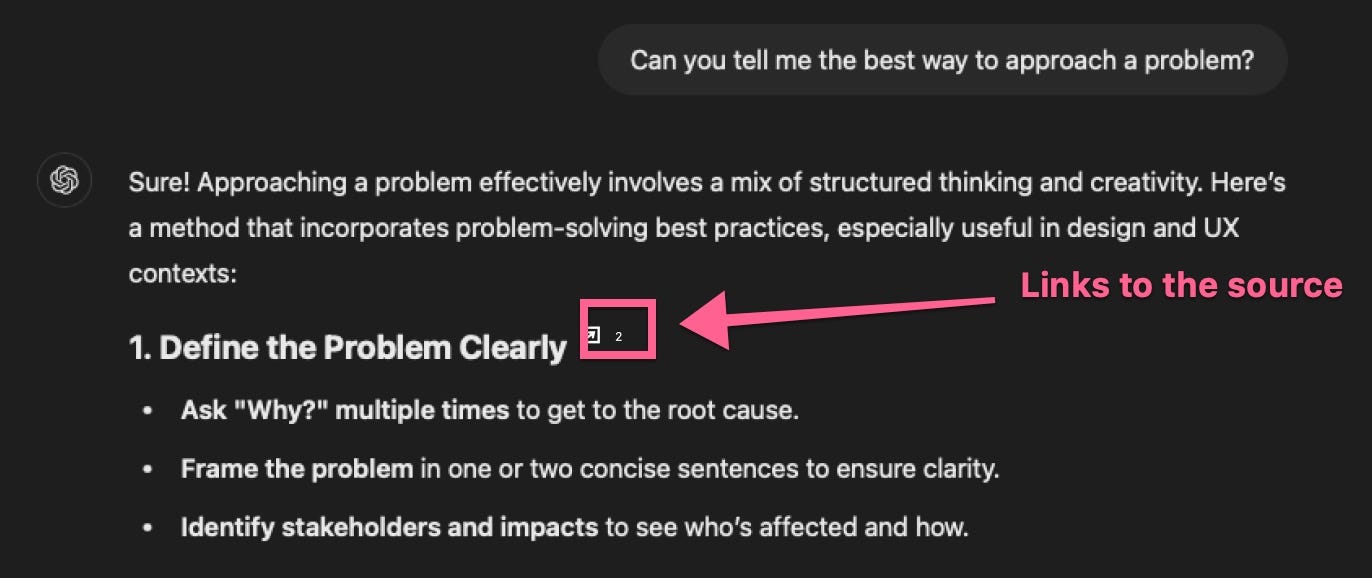

5: Check Sources: Adding a "Question Mark" button that lets users fact-check and see the sources of the information provided, promoting transparency and trust.

Here’s how sourcing could look, acknowledging that ChatGPT already does this for its newly released web search capability:
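To make the confidence scale (feature 4) more concrete, here is a minimal Python sketch of the display logic only. It assumes the system can already produce a calibrated confidence score between 0 and 1 for each response, which the ChatGPT interface does not currently show and which is itself a hard problem. The function name, thresholds, colors, and labels are all hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only (not taken from ChatGPT).
HIGH_THRESHOLD = 0.8
MEDIUM_THRESHOLD = 0.5


@dataclass
class ConfidenceBadge:
    color: str
    label: str


def confidence_badge(score: float) -> ConfidenceBadge:
    """Map an assumed confidence score in [0, 1] to a color-coded badge."""
    # Reject out-of-range values (and NaN, which fails both comparisons).
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= HIGH_THRESHOLD:
        return ConfidenceBadge("green", "High confidence")
    if score >= MEDIUM_THRESHOLD:
        return ConfidenceBadge("yellow", "Medium confidence: consider checking sources")
    # Anything below the medium threshold nudges the user to verify.
    return ConfidenceBadge("red", "Low confidence: verify before relying on this")
```

For example, `confidence_badge(0.92)` returns a green "High confidence" badge. Keeping the thresholds in named constants makes it easy for designers to tune where the color bands fall after user testing.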

Broadening Horizons: Rewilding AI

As ChatGPT begins to supplant traditional search engines, we have to consider its role in a diverse digital ecosystem. To start, let’s look at the hierarchy of knowledge. Pre-internet, our knowledge sources were limited and controlled: Encyclopedia Britannica, the library, whatever we were taught in school, plus any cultural wisdom our elders passed on. Then the internet made anything and everything accessible, and Google became our window to the world. As Google’s Search UI has become ever more littered with ads, finding value there is that much harder. Separately, if your web page doesn’t appear on the first results page, for all practical purposes it doesn’t exist (and without sourcing, nary a web page will “exist” with AI). Meanwhile, social media has overwhelmed us with conspiracy theories alongside mainstream media, blurring the lines and playing with the concept of truth. Now, with GenAI’s training data standing in for all knowledge, our tunnel vision has narrowed even further.

Rather than operating purely from Western, Educated, Industrialized, Rich, and Democratic (WEIRD) values, ChatGPT has the opportunity to surface other cultures and ways of being. The need to rewild the internet, moving from monoculture to polyculture, applies to the training data for Large Language Models (LLMs) as well. For instance, Native Hawaiians have created EKGPT, a custom GPT built on Hawaiian cultural wisdom, which demonstrates a more community-oriented approach to problem-solving. AI’s training data needs to reflect multiple cultures and perspectives. In short, OpenAI has the opportunity to pioneer a polycultural AI landscape.

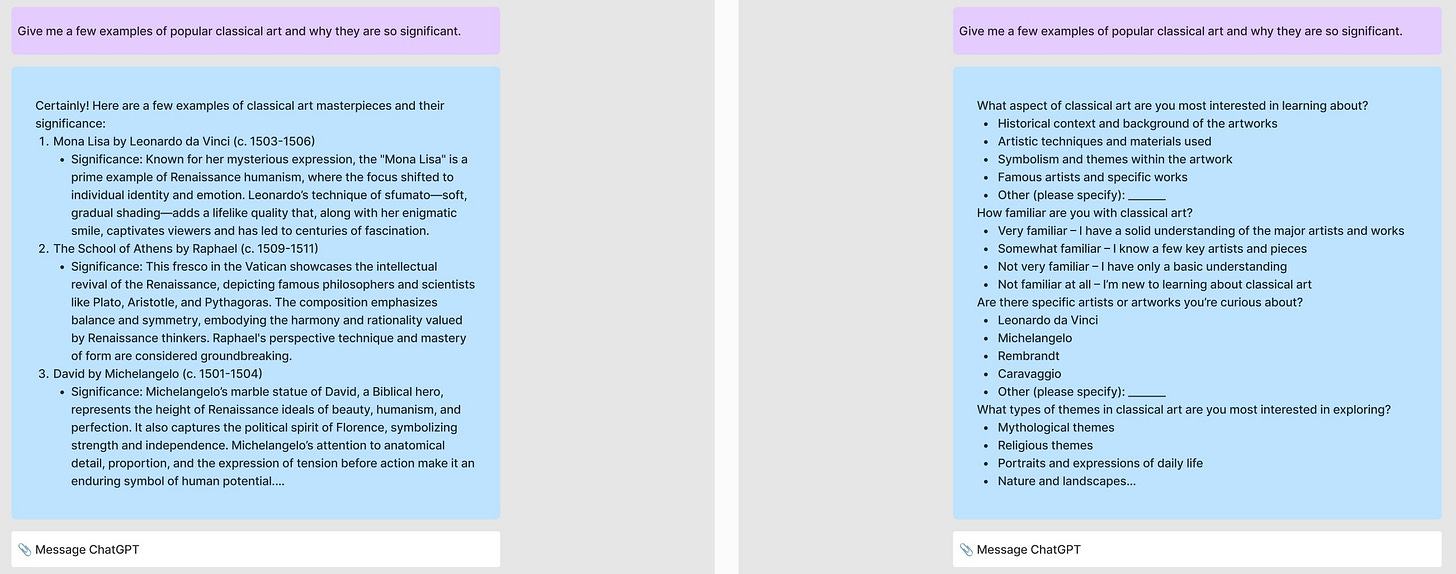

One way we could address these challenges is to guide users in their prompting. This could look like asking a few “survey questions” up front, as you see on the right:

I also explored prompting guidance from a conversational perspective: a “No assumptions” feature you could toggle on or off, depending on the question you’re asking:

Envisioning a Humane ChatGPT

Picture ChatGPT encouraging users, reassuring them as they explore new strategies, and fostering collaboration by suggesting real-world discussions. Imagine an AI whose communication reflects the diversity of its users and that handles imperfection gracefully. Harnessing AI for good also means giving users control over their data and interaction preferences, an area where OpenAI can lead with passion and precision.

Product designer Kabeer Andrabi provides more detail on this approach:

Normalize Imperfections and Social Aspects: ChatGPT could address areas where users may feel hesitant. For example, "It’s normal to feel unsure when exploring new strategies—everyone’s learning as they go! Let's tackle this together, and I'll walk you through each step."

Encourage Goal Alignment with Real-Life Engagement: For users working on personal growth or skills, the AI might suggest collaborative elements. "Why not discuss this idea with a colleague? I can help you summarize your main points."

Generate personas that represent marginalized groups and users with accessibility needs.

Instead of delivering a long paragraph up front, ask the user: "Would you like me to tell you more about this?"

Adapt the communication style to match the user's personality and needs.

Clearly distinguish between factual information and AI-generated opinions or speculations.

User Control: Give users more control over their interaction preferences and data usage.

Well-being Focus: Integrate features that actively promote user well-being, such as mood tracking or stress-reduction techniques. For example, ChatGPT could start a session by asking users how they’re feeling.

OpenAI's Role in the Humane Tech Movement

For OpenAI, integrating humane principles from the outset means more than future-proofing against criticism. It's about pioneering ethical tech that respects and uplifts its users. OpenAI's product teams have the skills and creativity to enhance ChatGPT, making it a cornerstone of humane AI. Joining this movement aligns with OpenAI's mission to ensure AI benefits humanity, bridging innovation with responsibility.

Call to Action:

To the dedicated folks at OpenAI, this is your invitation to shape a more humane digital future. Explore diverse knowledge systems, enhance transparency, and elevate user agency. Harness your talents to ensure ChatGPT strengthens humanity rather than undermines it. Let's forge an ethical AI landscape we can be proud of. Together, let's learn, adapt, and create AI that's not just intelligent, but wise.